Nicholas Carah, The University of Queensland and others.

Once upon a time, most advertisements were public. If we wanted to see what advertisers were doing, we could easily find it – on TV, in newspapers and magazines, and on billboards around the city.

This meant governments, civil society and citizens could keep advertisers in check, especially when they advertised products that might be harmful – such as alcohol, tobacco, gambling, pharmaceuticals, financial services or unhealthy food.

However, the rise of online ads has led to a kind of “dark advertising”. Ads are often only visible to their intended targets, they disappear moments after they have been seen, and no one except the platforms knows how, when, where or why the ads appear.

In a new study conducted for the Foundation for Alcohol Research and Education (FARE), we audited the advertising transparency of seven major digital platforms. The results were grim: none of the platforms are transparent enough for the public to understand what advertising they publish and how it is targeted.

Why does transparency matter?

Dark ads on digital platforms shape public life. They have been used to spread political falsehoods, target racial groups, and perpetuate gender bias.

Dark advertising on digital platforms is also a problem when it comes to addictive and harmful products such as alcohol, gambling and unhealthy food.

In a recent study with VicHealth, we found age-restricted products such as alcohol and gambling were targeted to people under the age of 18 on digital platforms. At present, however, there is no way to systematically monitor what kinds of alcohol and gambling advertisements children are seeing.

Advertisements are optimised to drive engagement, such as clicks or purchases, and are targeted at the people most likely to engage. For example, people identified as high-volume alcohol consumers will likely receive more alcohol ads.

This optimisation can have extreme results. A study by FARE and Cancer Council WA found one user received 107 advertisements for alcohol products on Facebook and Instagram in a single hour on a Friday night in April 2020.

How transparent is advertising on digital platforms?

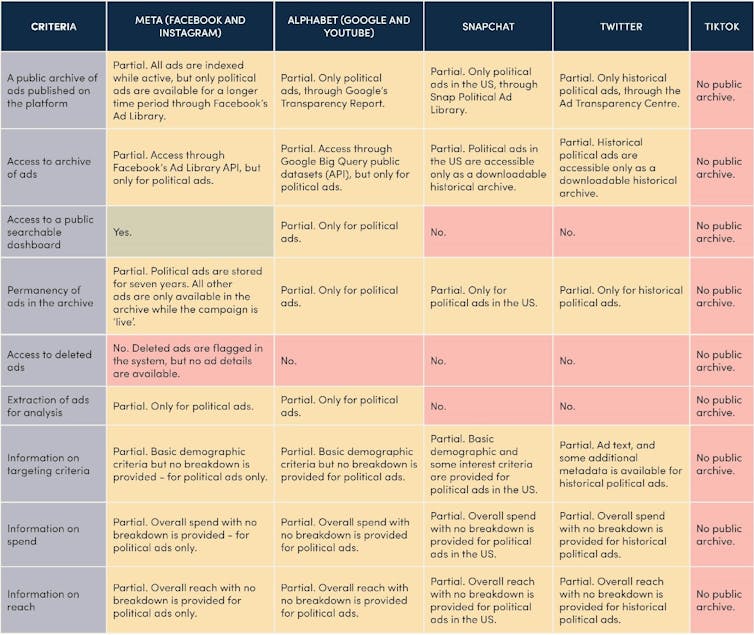

We evaluated the transparency of advertising on major digital platforms – Facebook, Instagram, Google search, YouTube, Twitter, Snapchat and TikTok – by asking the following nine questions:

- is there a comprehensive and permanent archive of all the ads published on the platform?

- can the archive be accessed using an application programming interface (API)?

- is there a publicly searchable dashboard that is updated in real time?

- are ads stored in the archive permanently?

- can we access deleted advertisements?

- can we download the ads for analysis?

- are we able to see what types of users the ad targeted?

- can we see how much it cost to run the advertisement?

- can we tell how many people the advertisement reached?

All platforms included in our evaluation failed to meet basic transparency criteria, meaning advertising on these platforms is not observable by civil society, researchers or regulators. For the most part, advertising can only be seen by its targets.

Notably, TikTok had no transparency measures at all to allow observation of advertising on the platform.

Other platforms weren’t much better, with none offering a comprehensive or permanent advertising archive. This means that once an advertising campaign has ended, there is no way to observe what ads were disseminated.

Facebook and Instagram are the only platforms to publish a list of all currently active advertisements. However, most of these ads are deleted after the campaign becomes inactive and are no longer observable.

Platforms also fail to provide contextual information for advertisements, such as advertising spend and reach, or how advertisements are being targeted.

Without this information, it is difficult to understand who is being targeted with advertising on these platforms. For example, we can’t be sure companies selling harmful and addictive products aren’t targeting children or people recovering from addiction. Platforms and advertisers ask us to simply trust them.

We did find platforms are starting to provide some information on one narrowly defined category of advertising: “issues, elections or politics”. This shows there is no technical reason for keeping information about other kinds of advertising from the public. Rather, platforms are choosing to keep it secret.

Bringing advertising back into public view

When digital advertising can be systematically monitored, it will be possible to hold digital platforms and marketers accountable for their business practices.

Our assessment of advertising transparency on digital platforms demonstrates that they are not currently observable or accountable to the public. Consumers, civil society, regulators and even advertisers all have a stake in ensuring a stronger public understanding of how the dark advertising models of digital platforms operate.

The limited steps platforms have taken to create public archives, particularly in the case of political advertising, demonstrate that change is possible. And the detailed dashboards about ad performance they offer advertisers illustrate there are no technical barriers to accountability.

Nicholas Carah, Associate Professor in Digital Media, The University of Queensland; Aimee Brownbill, Honorary Fellow, Public Health, The University of Queensland; Amy Shields Dobson, Lecturer in Digital and Social Media, Curtin University; Brady Robards, Associate Professor in Sociology, Monash University; Daniel Angus, Professor of Digital Communication, Queensland University of Technology; Kiah Hawker, Assistant researcher, Digital Media, The University of Queensland; Lauren Hayden, PhD Candidate and Research Assistant, The University of Queensland, and Xue Ying Tan, Software Engineer, Digital Media Research Centre, Queensland University of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.